My experience with LLMs in education, as a student

Happy holidays! This is part of a series of notes detailing my college experience. I originally entered the Fowler School of Engineering as a computer science major in fall 2022, and switched to software engineering in spring 2023. I’ve technically met all my graduation requirements now, but still plan on walking with the class of 2026. In the meantime, I thought it might be good to look back on the experience.

To put this note into perspective, ChatGPT was released on November 30, 2022. Excluding a few months, my graduating class at Chapman University was the first to have easy access to LLMs for almost the entire college experience.

Putting aside the safety concerns and dubious copyright ethics, I think LLMs can sort of be a learning tool. There is some gain in efficiency from being able to ask Claude a question and get an in-context response. I recall using LLMs myself for theory-heavy classes like programming languages and algorithm analysis, to explain or rephrase terminology in a more familiar manner. Features like ChatGPT’s “study mode” pre-prompt the model to act more like a tutor, providing step-by-step guidance without giving the user the whole answer.

There is no doubt in my mind that LLMs can make learning more efficient, but is that really what we want from education? Putting it in the context of my classes, would I have learned more had I not used ChatGPT or Claude to explain a concept? What would I have done instead? Probably asked the professor for help. In my personal experience, I started going to office hours less because of LLMs, which is a bit disappointing on my part because I’ve gained many other insights from going to office hours in the past.

In my experience, the reaction to LLMs from professors and faculty in the Fowler School of Engineering was mixed. To this day, some professors require citing code assistance using something similar to the following (contrived) example:

    #include <string>
    #include <fstream>
    #include <iostream>

    using namespace std;

    int main() {
        /* Begin assistance from ChatGPT: How do I read in a file line by line in C++? */
        ifstream file("hello.txt");
        string line;
        while (getline(file, line)) {
            cout << line << endl;
        }
        /* End assistance from ChatGPT */
        return 0;
    }

(This example was written entirely by me, and not, in fact, generated by ChatGPT.)

Those same professors would subsequently ban AI assistance on quizzes and exams, in the same way you can’t use search engines or notes.

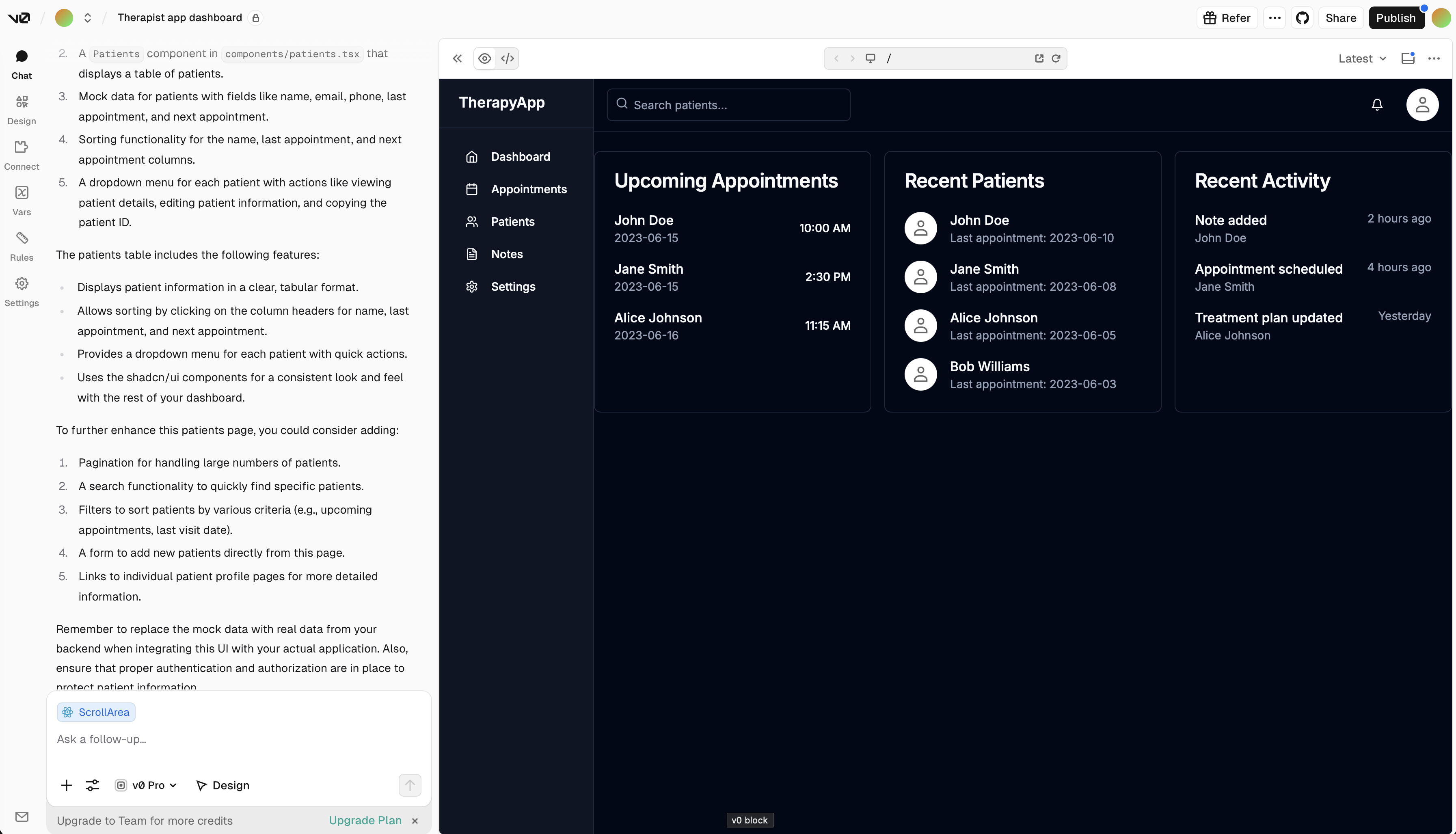

Other professors fully embraced AI. For software engineering at Chapman, there is a separate track of classes focused on engineering methodologies, software design patterns, and other topics. In one class, I remember getting full points for an assignment where all I did was submit a v0-generated design along with some LLM-generated documentation. To be clear: the professor encouraged this, and I was fully transparent about how I used AI to do the assignment. Subsequent assignments were the same, and even the provided instructions and templates had clear tells of being AI-generated.

In retrospect, I think I experienced the vicious cycle that AI can bring to education: the educator generates assignment details with AI, students’ submissions are AI-generated, and the educator likely reviews and grades submissions with AI. Given how ingrained gender and racial biases are in current-generation LLMs, this cycle has the potential to discriminate against women, people of color, and other groups underrepresented in technology.

All of this to say: as a student, one does not need to look to the industry to see the consequences of vibe coding; those consequences are probably happening right in front of you. Between students using LLMs for projects and professors handing out LLM-generated assignments, my college experience was significantly altered by this technology.